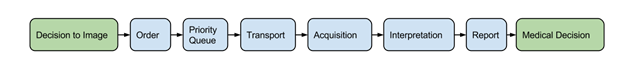

Last October, my team started working on a project to bridge the communication gaps between inpatient general medicine and radiology. Despite having completed a full year of internship before starting residency, we quickly realized that as radiologists we knew very little about how healthcare is delivered on the wards. Understanding how well the imaging workflow runs from ordering to reporting, and identifying possible delays by systematically analyzing patient data, seemed straightforward.

A two-hour meeting, eight weeks of delay, and several email exchanges later, we now rely mostly on manual data collection. This blog post is about what happened.

Data Isn’t Just Big – Data Is Broad

“Big data” is traditionally linked to volume and velocity: How much data is there? How fast is it growing? Compared with the data held by Silicon Valley giants, radiology data does not come close to that level of “bigness,” even as part of a multibillion-dollar health system.

Data variety is the neglected little cousin of big data: while size can be measured in exabytes and warehouses (or punch cards, if that is your preferred unit of measurement), heterogeneity is difficult to measure.

When data variety in radiology is considered, it is easy to be lulled into thinking that it refers primarily to the combination of imaging data, unstructured report text, and structured metadata. In practice, heterogeneity across data sources is a distinct source of variety in its own right, often creating significant problems through duplicated or inconsistent information. In a 2014 article in the journal Big Data, James Hendler presents the concept of “broad data.” He writes:

[T]he “broad data” problem of variety [is] trying to make sense out of a world that depends increasingly on finding data that is outside the user’s control, increasingly heterogeneous, and of mixed quality.

Different datasets may coexist peacefully, but real-life use cases do not respect arbitrary data boundaries, and those boundaries can become unnecessary barriers. Our project required integrating information from both the institutional electronic medical record (EMR) and the patient flow database. Although my institution’s data warehouse was designed as a single source of data, the difficulty of integration rises sharply whenever a query crosses database boundaries.

The Dark Side of Standardization

At our institution, the radiology information system (RIS) began as a self-contained data system that was fully accessible through a relational database schema. When the health system adopted an institution-wide electronic medical record system (with RIS capabilities), the upgrade paradoxically impeded data analysis. A domain-specific radiology analyst may understand the nuances of combining imaging orders, examination status, and patient flow across different data sources, but the enterprise analyst for the now-standardized EMR needed significantly more effort to understand our request.

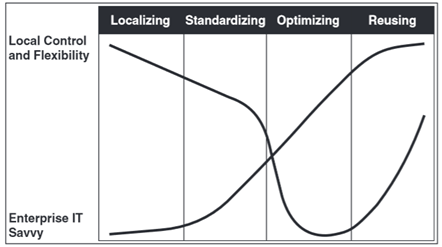

The problem we faced was not unique. Kohli et al in a recent 2015 American Journal of Roentgenology (AJR) article cite the natural pathway of an enterprise becoming “IT Savvy.” Citing concepts from the book IT Savvy: What Top Executives Must Know to Go from Pain to Gain, the AJR authors argue that standardization of enterprise information systems is closely linked to loss of local control and flexibility. The reconciliation of these two sets of needs is what brings an organization to the next steps of the IT evolution to optimize and reuse resources.

The impact of enterprise standardization is not always positive. Source: Fig. 2 in Kohli, Dreyer, and Geis, AJR Apr 2015, reprinted from Weill and Ross, HBR Press 2009

Moving Forward

Kohli et al. suggest that the enterprise’s own integration efforts often compound the difficulty of achieving these goals in a healthcare system. Radiology faces greater challenges than ever before, delivering imaging insights while ceding control to enterprise IT. However, IT Savvy suggests that rather than resisting change, the better course is to keep moving forward.

For the enterprise, this means solving “broad data” problems. Hendler states that addressing heterogeneity in massive data sets takes place in three steps: (1) discovery, (2) integration, and (3) validation. While natural language processing and machine learning continue to push the edge of medical informatics, basic data integration remains a cornerstone of data science.
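To make Hendler’s three steps a little more concrete, here is a toy sketch in Python. It assumes two hypothetical extracts, imaging orders from the EMR and admission records from a patient flow database, that happen to share a medical record number field (`mrn`). Every field name, value, and check here is an illustrative assumption, not our institution’s schema and not Hendler’s own method; real discovery and validation are far messier.

```python
from datetime import datetime

# Hypothetical extracts from two separate systems. Field names and values
# are illustrative assumptions, not a real EMR or patient-flow schema.
emr_orders = [
    {"order_id": "A1", "mrn": "1001", "ordered": "2015-10-01 08:00", "reported": "2015-10-01 11:30"},
    {"order_id": "A2", "mrn": "1002", "ordered": "2015-10-01 09:15", "reported": "2015-10-01 09:00"},
    {"order_id": "A3", "mrn": "1003", "ordered": "2015-10-02 07:45", "reported": "2015-10-02 10:00"},
]
patient_flow = [
    {"mrn": "1001", "unit": "Medicine 4", "admitted": "2015-09-30 22:00"},
    {"mrn": "1002", "unit": "Medicine 5", "admitted": "2015-10-01 01:30"},
]


def parse(ts):
    """Parse the assumed 'YYYY-MM-DD HH:MM' timestamp format."""
    return datetime.strptime(ts, "%Y-%m-%d %H:%M")


def discover_keys(left, right):
    """Step 1 (discovery): field names present in both sources."""
    return set(left[0]) & set(right[0])


def integrate(orders, flow, key):
    """Step 2 (integration): left-join each order to its patient-flow record."""
    flow_by_key = {row[key]: row for row in flow}
    return [{**order, **flow_by_key.get(order[key], {})} for order in orders]


def validate(rows):
    """Step 3 (validation): flag unmatched rows and out-of-order timestamps."""
    problems = []
    for row in rows:
        if "unit" not in row:
            problems.append((row["order_id"], "no patient-flow match"))
        if parse(row["reported"]) < parse(row["ordered"]):
            problems.append((row["order_id"], "reported before ordered"))
    return problems


key = discover_keys(emr_orders, patient_flow).pop()  # "mrn" in this toy data
merged = integrate(emr_orders, patient_flow, key)
for order_id, issue in validate(merged):
    print(order_id, issue)
# A2 reported before ordered
# A3 no patient-flow match
```

Even in this tiny example, the join itself is the easy part; most of the effort goes into knowing which fields can be trusted to link the two systems and which records should be questioned afterward.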

Radiologists and imaging informaticists should strive to help the enterprise reach optimization and reusability, because both milestones help radiology develop and deliver domain-specific knowledge with greater control and flexibility. The result is a data-driven imaging practice with well-aligned goals and incentives. In the words of English historian Thomas Fuller (or, incidentally, Harvey Dent), “It is always darkest just before the Day dawneth.” And the dawn is coming.

This post originally appeared on the Society for Imaging Informatics in Medicine (SIIM) blog.