Category Archives: Technology and Informatics

Machine learning, real opportunities: Dr. Keith Dreyer’s keynote sets tone for ISC 2016

Dr. Keith Dreyer opens the Intersociety Summer Conference (ISC) with a keynote that describes data science and gives an overview of how machine learning has evolved over time.

He describes that machines and humans inherently see things differently. Humans are excellent at object classification, recognition of faces, understanding language, driving, and imaging diagnostics. Continue reading

Posted in Technology and Informatics

Tagged Artificial Intelligence, machine learning, Quality, Radiology, Value

Five ways to keep up coding skills when you are a full-time radiologist

Radiologists have a day job (or a night job, depending on your precise definition of “radiologist.”) Many people want to learn the syntax of a computer language, while some want to keep up on existing skills.

If your goals are similar to mine, these might help. Now these are not ways to learn to write code (I’ll write about that later), but ways to brush up on existing skills.

Here are five things to help keep up your coding skills:

Work on a Project

Most radiology practices can be improved by better use of technology Continue reading

Two Questions for Four Data Visualization Types, and Why It Matters

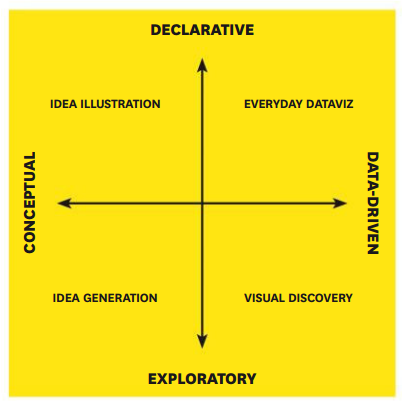

Not long ago, the ability to create smart data visualizations, or dataviz, was a nice-to-have skill. For the most part, it benefited design- and data-minded managers who made a deliberate decision to invest in acquiring it. That’s changed. Now visual communication is a must-have skill for all managers, because more and more often, it’s the only way to make sense of the work they do.

A June 2016 Harvard Business Review article by Scott Berinato discusses four types of data visualization, in HBR’s traditional “boil complex stuff down to a 2×2 matrix” style no less. In short, what works depends on the level of detail needed to convey the purpose.

The overall ideas are reminiscent of the work of Edward Tufte and his many excellent books on visualization.

The HBR article is worth a read for anyone interested in business intelligence, data analytics, or data visualization (which, as Berinato says, is probably a misnomer – it’s not the visualization that matters, but the question it seeks to answer).

Posted in Technology and Informatics

Tagged Big Data, business intelligence, Technology, visualization

Aside

Cognitive computing, along with its technological brethren artificial intelligence and machine learning, is wading into the provider space now. IT consultancy IDC, in fact, predicted that by 2018 nearly one-third of healthcare systems will be running cognitive analytics to extract … Continue reading

Password strength – something all radiologists should know

While taking a break from studying for the Core Exam, I stumbled upon this 2016 document from Microsoft about password security (yes, in some circles that is considered “taking a break”).

As radiologists, every day we are asked to type in some sort of authentication username and password at work. Every other week, we’re asked to change passwords for security reasons. Every month, we forget one of those 23 passwords we’ve created over the past 3 years for the VA or another affiliated hospital, or for some software we’ve not used for a while, or that we just plain forgot. Continue reading

Posted in Technology and Informatics

Aside

The use of the phrase, “Artificial Intelligence” has exploded within the past few years as the theme of dozens of our most popular movies and television shows, magazines, books, and social media. This is despite the difficulty that many experts … Continue reading

Aside

The May 2016 iteration of FHIR… has arrived. Most notable among its new capabilities: support for the Clinical Quality Language for clinical decision support as well as further development of work on genomic data, workflow, eClaims, provider directories and CCDA … Continue reading

The Paradox of Standardizing Broad Data

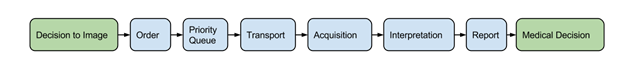

Last October, my team started working on a project to bridge the communication gaps between inpatient general medicine and radiology. Despite having done a full year of internship before starting residency, we quickly realized that as radiologists we knew very little about how healthcare is delivered on the wards. Understanding how well the imaging workflow runs from ordering to reporting, and identifying possible delays by systematically analyzing patient data, seemed straightforward.

A 2-hour meeting, eight weeks of delay, and several email exchanges later, we now rely mostly on manual data collection. This blog post is about what happened. Continue reading

Programmable DNA Circuits Make Smart Cells a Reality – Sort of

… and imagine if you could program life itself. Rather than 0’s and 1’s, you have four possibilities: a computing system performing quaternary arithmetic.
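To make the "four possibilities" idea concrete, here is a minimal sketch that treats a DNA string as a base-4 number. The mapping A=0, C=1, G=2, T=3 and the function names `dna_to_int` and `int_to_dna` are arbitrary choices for illustration, not anything from the research discussed in this post, and real DNA circuits do not compute this way.

```python
# Hypothetical mapping of DNA bases to base-4 digits; the assignment
# A=0, C=1, G=2, T=3 is an arbitrary choice made for this illustration.
BASE_TO_DIGIT = {"A": 0, "C": 1, "G": 2, "T": 3}
DIGIT_TO_BASE = "ACGT"


def dna_to_int(sequence: str) -> int:
    """Read a DNA string as a base-4 number, most significant base first."""
    value = 0
    for base in sequence.upper():
        value = value * 4 + BASE_TO_DIGIT[base]
    return value


def int_to_dna(value: int, length: int) -> str:
    """Write a non-negative integer as a fixed-length base-4 DNA string."""
    if value < 0 or value >= 4 ** length:
        raise ValueError("value does not fit in the requested length")
    bases = []
    for _ in range(length):
        # Peel off the least significant base-4 digit each time.
        value, digit = divmod(value, 4)
        bases.append(DIGIT_TO_BASE[digit])
    return "".join(reversed(bases))


if __name__ == "__main__":
    number = dna_to_int("GAT")
    print(number)
    print(int_to_dna(number, 3))
```

Each base carries two bits of information under this scheme, so a three-base string can hold any value from 0 to 63.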

I still remember being dazzled as a freshman in college, during the first computer science lecture. The professor spoke of quantum computers, where improvements in speed of calculations can be measured in squaring time 2n rather than the traditional doubling time (i.e. Moore’s law) 2n. And there was biologic computing, using simple building blocks of genetic material ACTG to perform calculations which take place in living cells.

Then I spent the 15 years that followed writing them off as science fiction, the pontifications of an old man.

I was, of course, wrong.